The Evolution of Computer Programming Languages by dullhunk

Learning to code has become a heated topic these days. I read this critical article about the UK’s decision to teach children to code as part of the curriculum. Then there was this article about how tech skills are overvalued in today’s marketplace. And not to be outdone, there was this about how even if a person does get a degree in computer science, they might not have the skills companies want.

So, is learning to code important? If so, should it be a mandatory class in elementary/secondary education? If someone wants to learn to code, should they teach themselves for free using the many sites online? What about attending a coding bootcamp? Does majoring in computer science at a college/university really give someone an edge when looking for a software engineering position?

Is Coding Important?

Let’s first define “important.” My definition of important is: useful, bordering on necessary to function in some capacity. In the developed world, I’m pretty sure most people would say coding is important. Think of all the things that depend on code for their functionality. Without code, there would be no cell phones and none of those apps we love so much. There would be no jobs that use a computer. In our cars, the fancy touchscreens that tell us the traffic on our way to and from work would be gone. The microwave, oven, dishwasher, and Wi-Fi-connected television would be pretty much useless. The list goes on and on. People who knew how to code made these things possible. It’s important that many people know how to code since so much of the convenience of our daily lives depends on it.

Teaching Children To Code

The controversy about teaching programming in schools is really a controversy about a broader issue. Is what we teach children in school relevant in today’s world? Since coding is important in our technology-driven society, it seems relevant to teach it to children. But how many children, and when? Not every child is going to become a software engineer, so some would argue a computer science course should not be a mandatory part of the curriculum. Yet certain other courses are mandatory, like physical education and foreign languages, even though children aren’t expected to become professional athletes or translators. Those courses teach children about healthy lifestyles and other cultures, respectively. Students must take them so they are “well-rounded.”

I’d argue computer science fits into that “well-rounded” objective. I was never exposed to computer science in school, so it was never on my radar to learn it. However, I was exposed to art, music, keyboard typing, woodworking, sewing, home economics, Spanish, German, and French in my middle school years (grades 6-8). Why not add computer science to the list of “exposure courses”? Students should be exposed to writing code so they know what it’s like and what it can do. If students enjoy learning to program, they can take it as an elective in high school or even pursue it in college. Most schools around the world teach history, so students understand how the world they live in came to be. Computer science will also teach them how the world they live in came to be.

Learning to Code Through Online Tutorials

The number of websites that teach visitors to code is staggering. There’s Codecademy, Code School, and Code.org, to name a few. There are also free online courses through sites like Udacity and edX (founded by MIT and Harvard) that people can take to learn to code.

Before I started the MCIT program, I thought I’d give myself a head start before classes began and take the Python course on Codecademy. I went through each cutesy little exercise, but truth be told, I didn’t know what I was doing. Nothing really sank in. I followed the directions and made guesses on the open-ended exercises, but I didn’t see the big picture about what I could really do with if statements and while loops. Making a tip calculator was nice, but it gave too simplistic a view of what coding can really do. That’s my opinion. Maybe there are others for whom Codecademy opened doors to all the possibilities! It wasn’t for me (when I was a beginner). Note: If you already know how to code and want to learn a new programming language or framework, these websites are invaluable!
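For readers who have never seen one, here is roughly what a beginner tip calculator built from an if statement and a while loop might look like. This is my own minimal sketch, not the actual Codecademy exercise; the function names `tip_amount` and `tip_table` and the default percentages are made up for illustration.

```python
# Hypothetical beginner exercise: names and defaults are illustrative only.

def tip_amount(bill, percent):
    """Return the tip for a bill at the given percentage."""
    # The "if statement" part: reject inputs that make no sense.
    if bill < 0 or percent < 0:
        raise ValueError("bill and percent must be non-negative")
    return round(bill * percent / 100, 2)


def tip_table(bill, start=15, stop=25, step=5):
    """Return (percent, tip, total) rows for a range of tip percentages."""
    rows = []
    percent = start
    # The "while loop" part: keep going until we pass the highest percentage.
    while percent <= stop:
        tip = tip_amount(bill, percent)
        rows.append((percent, tip, round(bill + tip, 2)))
        percent += step
    return rows


if __name__ == "__main__":
    for percent, tip, total in tip_table(40.0):
        print(f"{percent}% tip: {tip:.2f}, total: {total:.2f}")
```

It works, and it does use both constructs, but on its own it says little about why you would reach for a loop or a conditional in a larger program.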

I believe in a more structured approach to teaching computer science. For the budget-conscious or for those who want to learn to code to move up in their career, taking courses through Udacity or edX might be the way to go. These courses require the student to be motivated, to put in the time to learn (much more time than a Codecademy course takes), and to be ready for some difficult challenges. They are more thorough and will leave students better prepared should they want to dive deeper into computer science.

Learning to Code By Going to a Bootcamp

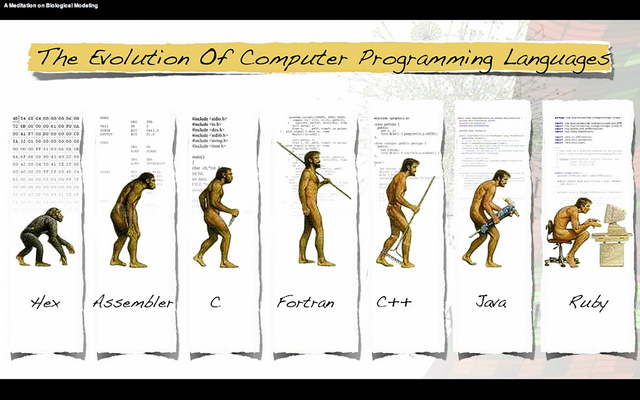

Coding bootcamps have started cropping up everywhere in the past few years. Click here to see an exhaustive list of them! Many of these bootcamps are specialized schools, focusing on one programming language or skill. They’re quicker than a college education and cheaper than one, too, but students only learn “skills employers want.” Some of these schools are partnered with companies that will hire graduates of the program, and that’s great! But are the students coming out of these schools really prepared to work in the tech industry? Do they know about best practices like what data structure to use in certain situations? Do they practice any test-driven development? Will they be relevant in later years when iOS has been replaced by a better mobile OS? What if Ruby becomes obsolete? Will the students of these bootcamps be able to adapt?

These bootcamps seem better for meeting short-term goals and for people who need structure to motivate them. So they may be a step up from online courses at Udacity and edX, but their long-term success remains to be seen.

Learning To Code By Studying Computer Science

It’s interesting how college computer science courses have come under fire for not providing graduates the skills employers want. Employers want people with a broad assortment of technical know-how. It’s not just programming languages, but frameworks, databases, specific operating systems, version control systems, testing applications… the list goes on and on! Do colleges teach these skills, or are they really more “theory-driven”?

I can only speak about my experience studying computer science at the graduate level. The courses I’ve taken taught me four programming languages, a tad about version control using Git, and test-driven development using JUnit for Java. I’ve also had courses that taught a lot of theory. I balked at having to take a Discrete Mathematics course. I’ll never be writing induction proofs ever again! Yet the topic of relations came up in my databases course this week, and I knew what that really meant because I learned all about it in Discrete Mathematics. Does it really matter that I understand how a relational database works? Maybe not, but it might come in handy somewhere down the line. I didn’t think knowing relations was important, but databases today wouldn’t be the same without them!
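To make that connection concrete: in discrete mathematics, a relation is just a set of tuples, and a table in a relational database can be viewed the same way. The sketch below is my own toy illustration in Python, not how a real database engine works; the sample data and the helper names `select`, `project`, and `join` are invented for this example.

```python
# A relation is a set of tuples. All data and helper names here are hypothetical.
students = {(1, "Ada"), (2, "Alan"), (3, "Grace")}  # (student_id, name)
enrolled = {                                        # (student_id, course)
    (1, "Databases"),
    (2, "Databases"),
    (3, "Discrete Mathematics"),
}


def select(relation, predicate):
    """Keep only the rows that satisfy the predicate (like SQL's WHERE)."""
    return {row for row in relation if predicate(row)}


def project(relation, *indexes):
    """Keep only the chosen columns (like SQL's SELECT column list)."""
    return {tuple(row[i] for i in indexes) for row in relation}


def join(left, right):
    """Pair up rows whose first column matches (a simple equi-join)."""
    return {l + r[1:] for l in left for r in right if l[0] == r[0]}


if __name__ == "__main__":
    combined = join(students, enrolled)  # (student_id, name, course)
    in_databases = select(combined, lambda row: row[2] == "Databases")
    print(sorted(project(in_databases, 1)))
```

Because the results are sets, duplicates disappear automatically, which matches the mathematical definition of a relation (real SQL tables, by contrast, may contain duplicate rows).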

Theory is dismissed as fluff by critics, but they do not seem to understand that theory is what practice is built on. People who learn theory can then apply it to real-world situations. Alan Turing, working in the theory of computation, devised the Turing machine (which you can now make out of Legos…), and that theoretical model became a foundation of computing as we know it today. Brilliant people know both theory and current, practical skills. They can adapt because they see The Big Picture.

Employers may want people with skills, but they also want people with experience. Knowing this fact will help you choose a good college with a good program. Colleges cannot teach students everything for the workplace, because employers vary widely in what they do! That’s why it’s important to choose a university that balances theory with practical applications and provides internship opportunities to gain experience in the field.

For these reasons, employers may value someone with a college degree in computer science more highly than someone with the same skillset without a degree. Someone who really understands the ins and outs of computer science might be able to adapt to changes in technology more easily than someone who is self-taught. But employers keep their options open. There are many programmers out there without a college education who are doing great work. It’s clearly not the end of the world to know how to code and not have a college degree in computer science. However, it may be more difficult to find a programming position, considering that many large companies require their developers to have a computer science degree.

Final Thoughts

Learning to code is important, if only to understand how modern technology works. If you’re interested in coding, there are tons of online resources, workshops, bootcamps, and colleges ready to help you on your way. Choose what’s best for your style of learning and level of motivation. There is no one right way to learn how to code. There’s only your way.